Over the last six months, the world has hastily adopted technological substitutes to stave off the pandemic blues. Virtual workshops, seminars, and digital twins are now the norm. With AR/VR expected to ride the next big wave of development, it is essential to consider how well these technologies currently operate in the physical world.

Future AI assistants will need to navigate efficiently, look around their surroundings, listen, and build memories of the 3D space they occupy. The field of embodied AI studies intelligent systems that have a physical or virtual embodiment. The idea is that intelligence emerges from the agent’s interaction with its environment through sensorimotor activity.

For Super Realistic Acoustics

Facebook AI’s research team has released an audio-visual tool that researchers can use to train AI agents in 3D environments with highly realistic acoustics. The team says the tool makes it possible to set up more complex embodied AI tasks, such as navigating to a sound-emitting goal, learning from echolocation, or exploring with multimodal sensors.

Using sound, the researchers wrote, leads to faster learning and more reliable navigation at inference. It also lets the agent locate the target from afar on its own. They launched SoundSpaces, an audio-rendering platform for 3D environments, in collaboration with Facebook Reality Labs.

Navigate Through Your Hall

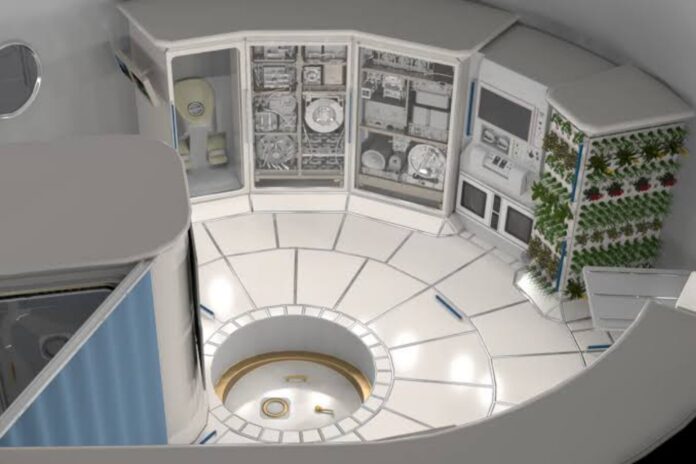

The FAIR team has built Semantic MapNet, an end-to-end learning framework for creating top-down semantic maps. Such a map shows where objects are located, inferred from egocentric (first-person) observations. Semantic MapNet can help agents learn and reason about how to navigate. For example, in a future where virtual real estate agents are common, buyers may want a guided tour to experience a property as it really is.
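To make the idea of a top-down semantic map concrete, here is a minimal sketch of the geometric intuition only. It is not Semantic MapNet, which is a learned end-to-end model; it simply assumes each egocentric observation is a labelled detection with a known distance and bearing from an agent whose pose is known. The function name, the tuple layout, and the majority-vote rule are all hypothetical choices made for illustration.

```python
import math
from collections import Counter, defaultdict


def build_top_down_map(observations, cell_size=1.0):
    """Project egocentric detections onto a top-down grid of labels.

    Each observation is a tuple (hypothetical layout):
        (agent_x, agent_y, agent_heading_rad, distance, bearing_rad, label)
    Returns a dict mapping (col, row) grid cells to the label seen most often.
    """
    votes = defaultdict(Counter)
    for ax, ay, heading, dist, bearing, label in observations:
        # Convert the agent-relative detection into world coordinates.
        angle = heading + bearing
        wx = ax + dist * math.cos(angle)
        wy = ay + dist * math.sin(angle)
        # Discretise the world position into a grid cell and record a vote.
        cell = (math.floor(wx / cell_size), math.floor(wy / cell_size))
        votes[cell][label] += 1
    # Keep the majority label per cell so a single bad detection is outvoted.
    return {cell: counts.most_common(1)[0][0] for cell, counts in votes.items()}


observations = [
    (0.5, 0.5, 0.0, 2.0, 0.0, "chair"),           # chair straight ahead
    (2.5, -2.5, math.pi / 2, 3.0, 0.0, "chair"),  # same chair, second viewpoint
    (2.5, 1.5, -math.pi / 2, 1.0, 0.0, "sofa"),   # one mislabelled detection
    (0.5, 0.5, 0.0, 2.2, math.pi / 2, "table"),   # table to the agent's left
]
semantic_map = build_top_down_map(observations)
print(semantic_map)
```

In this toy run the three detections that fall in the same cell resolve to "chair" by majority vote, and the table lands in its own cell. A learned system replaces the hand-written projection and voting with features and memory that are trained from data.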

For Embodied AI Simulations

Habitat-Lab is part of the AI Habitat simulation platform for embodied AI research. It is a modular, high-level library for end-to-end development in embodied AI: defining embodied AI tasks such as navigation and question answering, configuring embodied agents (physical form, sensors, capabilities), training those agents (through imitation or reinforcement learning), and benchmarking their performance on the defined tasks using standard metrics.
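The workflow described above (define a task, configure an agent, evaluate it with a metric) can be sketched in a few lines of plain Python. This is a toy illustration and does not use the Habitat-Lab API: `GridNavTask`, `Episode`, `greedy_agent`, and `evaluate` are hypothetical names, the world is an obstacle-free grid, and the training step is left out entirely. The metrics are success rate and SPL (success weighted by path length), a measure commonly reported for navigation.

```python
from dataclasses import dataclass

MOVES = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}


@dataclass
class Episode:
    start: tuple  # (x, y) starting cell
    goal: tuple   # (x, y) goal cell, assumed different from start


class GridNavTask:
    """Toy point-goal navigation task on an obstacle-free grid."""

    def __init__(self, max_steps=50):
        self.max_steps = max_steps

    def reset(self, episode):
        self.pos, self.goal, self.steps = episode.start, episode.goal, 0
        return self._observe()

    def _observe(self):
        # The agent's only "sensor": the goal offset relative to its position.
        return (self.goal[0] - self.pos[0], self.goal[1] - self.pos[1])

    def step(self, action):
        dx, dy = MOVES[action]
        self.pos = (self.pos[0] + dx, self.pos[1] + dy)
        self.steps += 1
        done = self.pos == self.goal or self.steps >= self.max_steps
        return self._observe(), done


def greedy_agent(observation):
    """Hand-written policy: move along the axis with the larger remaining offset."""
    dx, dy = observation
    if abs(dx) >= abs(dy):
        return "east" if dx > 0 else "west"
    return "north" if dy > 0 else "south"


def evaluate(task, agent, episodes):
    """Run every episode and report success rate and SPL."""
    successes, spl_total = 0, 0.0
    for ep in episodes:
        obs, done = task.reset(ep), False
        while not done:
            obs, done = task.step(agent(obs))
        shortest = abs(ep.goal[0] - ep.start[0]) + abs(ep.goal[1] - ep.start[1])
        if task.pos == task.goal:
            successes += 1
            # SPL credits a success in proportion to how direct the path was.
            spl_total += shortest / max(task.steps, shortest)
    n = len(episodes)
    return {"success_rate": successes / n, "spl": spl_total / n}


episodes = [Episode((0, 0), (3, 2)), Episode((1, 1), (-2, 1))]
print(evaluate(GridNavTask(), greedy_agent, episodes))
```

Keeping the task, the agent, and the evaluation loop separate is the point of the sketch: a learned policy could be swapped in for `greedy_agent` without touching the task definition or the metric code.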

For a long time now, Facebook AI has invested heavily in developing smart AI systems that can think, plan, and reason about the real world. By integrating embodied AI systems with its in-house, state-of-the-art 3D deep learning tools, the group aims to further improve how these systems understand objects and places. The team says it is committed to building next-generation AI technology that delivers the best possible results.

A reinforcement learning approach has been adopted so that an embodied agent can independently explore the affordance landscape of a new, unmapped 3D environment. The team has also set a new benchmark for faster mapping in unknown environments and has improved how robots follow instructions in simulation by using large-scale, disembodied image-captioning datasets. Further gains are expected as the supporting infrastructure improves.