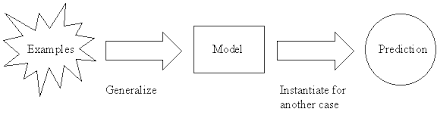

Most current learning algorithms build in mechanisms or assumptions that restrict the space of hypotheses, also called the underlying model space. Such a mechanism is termed inductive bias, also known as learning bias.

This mechanism encourages learning algorithms to prioritise solutions that have specific properties. Put simply, inductive bias (or learning bias) is the set of implicit or explicit assumptions that a machine learning algorithm makes in order to generalise from a set of training data.
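To make the definition concrete, here is a minimal sketch in plain Python. The toy data set and the two learners (`fit_line` and `fit_nearest_neighbour`) are illustrative assumptions, not taken from any particular library or paper. Both learners reproduce the training data exactly, yet they disagree on an unseen input because each one makes a different assumption about the target.

```python
# Toy training set sampled from y = 2x + 1 (an assumption made for this sketch).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def fit_line(xs, ys):
    """Least-squares line. Bias: 'the target is linear in x'."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_nearest_neighbour(xs, ys):
    """1-nearest neighbour. Bias: 'nearby inputs share the same output'."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict


linear_model = fit_line(xs, ys)
nn_model = fit_nearest_neighbour(xs, ys)

# Both hypotheses agree on every training point...
train_agree = all(abs(linear_model(x) - nn_model(x)) < 1e-9 for x in xs)

# ...but they generalise differently to an input far from the training set.
linear_at_10 = linear_model(10.0)  # extrapolates along the fitted line
nn_at_10 = nn_model(10.0)          # copies the label of the closest training point

print(train_agree, linear_at_10, nn_at_10)
```

The training data alone cannot decide between the two predictions at `x = 10`; only the assumptions built into each learner do, which is what the term inductive bias refers to.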

Mentioned below are 5 Inductive Biases in Deep Learning Models:

1. Structured Perception and Relational Reasoning:

Researchers at DeepMind introduced these biases into deep reinforcement learning architectures in 2019. The approach appears to improve the overall performance, learning efficiency, generalisation and interpretability of deep RL models.

2. Group Equivariance:

This is a very useful inductive bias, especially for deep convolutional networks. It mainly helps these networks generalise, and it reduces sample complexity by exploiting symmetries in the data.
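The simplest symmetry a convolution respects is translation. The sketch below checks this for a one-dimensional circular convolution in plain Python; the helper names `circular_conv` and `shift` and the sample values are assumptions chosen for illustration. It covers only cyclic shifts, not the larger symmetry groups (such as rotations and reflections) that group-equivariant networks target.

```python
def circular_conv(signal, kernel):
    """Cross-correlate a signal with a kernel, wrapping around at the edges."""
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal, steps):
    """Cyclically shift a signal to the right by `steps` positions."""
    n = len(signal)
    return [signal[(i - steps) % n] for i in range(n)]


signal = [1.0, 4.0, 2.0, 0.0, 3.0, 5.0]
kernel = [0.5, -1.0, 2.0]

# Equivariance: transforming the input and then applying the layer gives the
# same result as applying the layer and then transforming the output.
conv_then_shift = shift(circular_conv(signal, kernel), 2)
shift_then_conv = circular_conv(shift(signal, 2), kernel)

is_equivariant = all(
    abs(a - b) < 1e-9 for a, b in zip(conv_then_shift, shift_then_conv)
)
print(is_equivariant)
```

Because the layer handles every shifted copy of a pattern in the same way, it does not need to see each shifted copy during training, which is one way to read the claim about reduced sample complexity.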

3. Spectral Inductive Bias:

Spectral bias is an inductive bias that manifests itself not only in the learning process but also in the parameterisation of the model. According to research published in 2019 by a team that included Yoshua Bengio, models with this bias learn lower frequencies first.

4. Spatial Inductive Bias:

This is the type of bias found in CNNs (Convolutional Neural Networks), which assume a certain spatial structure in the data. According to researchers, this bias can also be used in models built on non-connectionist techniques. It can be introduced with very minor changes to the algorithm, and there is no need to impose a partition externally.
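One way to see what the spatial assumption buys in a CNN is to count weights. The sketch below is a back-of-the-envelope comparison under stated assumptions: a single-channel 32x32 input, an output of the same size, no bias terms, and hypothetical helper names `dense_weight_count` and `conv_weight_count`.

```python
def dense_weight_count(height, width):
    """Fully connected layer: every output pixel has its own weight
    for every input pixel, so no spatial structure is assumed."""
    pixels = height * width
    return pixels * pixels


def conv_weight_count(kernel_size):
    """Convolutional layer: one small kernel is reused at every position,
    which assumes that nearby pixels matter and that patterns repeat."""
    return kernel_size * kernel_size


dense_params = dense_weight_count(32, 32)
conv_params = conv_weight_count(3)

print(dense_params, conv_params)
```

The convolutional layer can only represent local, position-independent filters, so it searches a far smaller hypothesis space than the dense layer. That restriction is the spatial bias at work.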

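5. Invariance and Equivariance Bias:

These biases are used mainly to encode structure into models for relational data. They describe how a model's output behaves when its input undergoes various transformations: an invariant output stays the same, while an equivariant output changes in the same way as the input.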
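The difference between the two properties can be checked on a small set of values under reordering. This is a minimal sketch in plain Python; the functions `elementwise_square` and `sum_pool` are stand-ins chosen for illustration, not layers from a specific model.

```python
def permute(items, order):
    """Reorder items so that position j holds items[order[j]]."""
    return [items[i] for i in order]


def elementwise_square(items):
    """Applies the same function to each element independently."""
    return [x * x for x in items]


def sum_pool(items):
    """Aggregates all elements into a single number."""
    return sum(items)


values = [3, 1, 4, 1, 5]
order = [2, 0, 4, 1, 3]

# Equivariance: permuting the input permutes the output in the same way.
equivariant_holds = (
    elementwise_square(permute(values, order))
    == permute(elementwise_square(values), order)
)

# Invariance: permuting the input leaves the output unchanged.
invariant_holds = sum_pool(permute(values, order)) == sum_pool(values)

print(equivariant_holds, invariant_holds)
```

Models for sets and graphs are often built by stacking equivariant steps like the first and finishing with an invariant aggregation like the second, so that the prediction does not depend on the arbitrary order in which elements are listed.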

Inductive biases may keep changing and evolving, but these 5 have so far stayed relevant in deep learning models.